- cross-posted to:

- Technology@programming.dev


cross-posted from: https://programming.dev/post/36236133

Good, use it for that. It fucking sucks at art.

Different kind of ai. This is the very useful analytical kind.

this is bullshit.

the study was performed by Navinci Diagnostics, which has a vested interest in the use of technological diagnostic tools like AI.

the only way to truly identify cancer is through physical examination and tests. instead of wasting resources on AI, we should improve early detection by making tests more efficient, so patients can get tested more often and more cheaply.

But I wouldn’t count on miracles, these freaks at the top will use this AI for their own vile purposes.

This is actual pattern-recognition AI, not LLM plagiarism code.

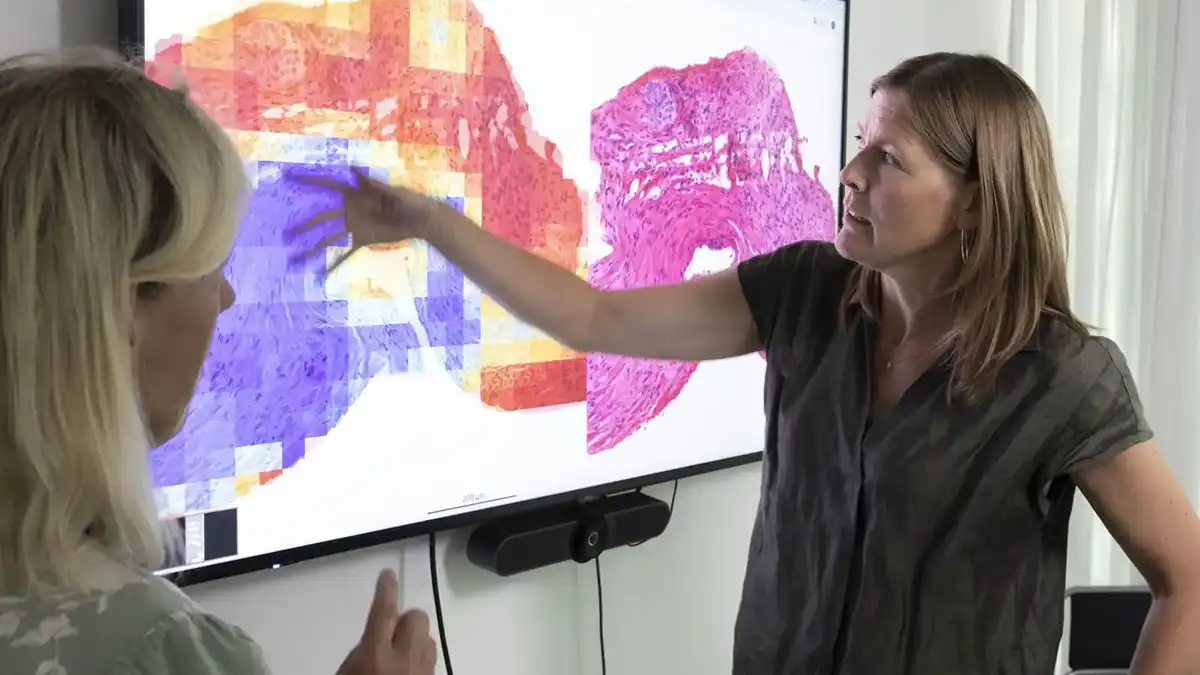

From the article: "All 232 men in the study were assessed as healthy when their biopsies were examined by pathologists. After less than two-and-a-half years, half of the men in the study had developed aggressive prostate cancer…"

HALF? I’d suggest staying away from that study … either they don’t know what they’re doing, or some AI made up that article…

From the peer-reviewed paper: “This study examined if artificial intelligence (AI) could detect these morphological clues in benign biopsies from men with elevated prostate-specific antigen (PSA) levels to predict subsequent diagnosis of clinically significant PCa within 30 months”… so yes, these were men who all had high cancer risk.

Well, it’s likely that AI is creating these articles. It’s just like 1984…

Maybe they specifically picked men with increased risk?

Half sounds pretty nuts otherwise.

Yes they did. It says so in the article.

ba dum tss

This is what machine learning is supposed to be: specialized models that solve a specific problem. It’s refreshing to read about some real AI research.

Yeah, this is a typical place for AI to actually shine, and we hear almost nothing about it because making fake porn videos of your daughter-in-law is somehow more important.

I feel like in an ideal world, people would use AI to improve the quality of their work rather than being replaced by it.

We live in a world where the strong take the last food from the weak in order to live even more luxuriously, because luxury, so to speak, is created through stolen or simply slave labor.

In short, the rich are rich only because they exploit the poor or simply slaves, otherwise they would be beggars or middle class.

Who is downvoting progress in cancer identification?

After reading the actual published paper referenced in the article, I would downvote the article because the title is clickbaity and does not reflect the paper’s conclusions. The title suggests that AI could replace pathologists, or that pathologists are inept. This is not the case. A better title would be “Pathologists use AI to determine if biopsied tissue samples contain markers for cancerous tissue that is outside the biopsied region.”

Lemmings with knee-jerk reactions to anything AI related

Ohhh, this 100%

I just posted a plaque imaging study using AI analysis that showed people on the carnivore diet reversing plaque buildup after more than a year on a strict ketogenic diet.

People I could have offended:

- AI

- diet zealots

- anti-keto reactionaries

- CICO advocates

But instead I used a title without any of the trigger words, and they missed it.

We could rewrite this headline as:

Advanced identification techniques let doctors diagnose cancer earlier saving lives!

Where is this study? I took a brief look through your post history, but you post so much keto/carnivore stuff that it’s hard to spot. It’s easy to jump on the downvote-persecution bandwagon without linking to it.

The original study: [Paper] - Plaque Begets Plaque, ApoB Does Not: Longitudinal Data From the KETO-CTA Trial - 2025

The update with new AI imaging: New KETO-CTA Data - Clarification and Update on Cleerly

These didn’t really get downvoted because the trigger words were avoided and the communities are actively pruned of uninterested people. If you are looking for downvote brigading, I could dig up examples for you.

And someone immediately downvoted you

Yeah, Lemmy can be very emotional!

Trying to keep a community on topic without that level of gut reaction is a Sisyphean task (https://discuss.online/modlog/696952?page=1&actionType=ModBanFromCommunity), but I try anyway.

13 of 20 bans for “Reason: Sockpuppet”. Looks like you do some good modding over there.

Thank you! Identifying sockpuppet accounts took some doing, but it has really been a fun adventure. Here is my moderation policy if you want the details: https://hackertalks.com/post/13655318

Thank you for your organic downvotes!

Actually, it’s 30 sockpuppet identifications so far.

Subscribed!

I was about to post a comment: Finally a use for AI that feels justified to spend energy on.

There was also a study going around claiming that LLMs caused the accuracy of cancer screenings by humans to decrease. I’m not a scientist, but I’m pretty sure the sample size was super small and limited to one hospital?

Anyway, maybe they’re remembering that, in addition to the automatic AI-hating downvotes.

Not that I’m a fan of AI being shoved everywhere, but this isn’t that.

Why would you use a large language model to examine a biopsy?

These should be specialized models trained off structured data sets, not the unbridled chaos of an LLM. They’re both called “AI”, but they’re wildly different technologies.

It’s like criticizing a doctor for relying on an air conditioner to keep samples cool when in fact they used a freezer, simply because the mechanism of refrigeration is similar.

But the title had “AI” in it.

Also to answer your question: https://lemvotes.org/post/programming.dev/post/36238264

A lot of people don’t realize that votes are public 🤓

Well, it would be logical to say that anonymity is a threat. Plus, it makes it easier to block thought-criminals if they become a threat… :3

What anonymity? Don’t joke with me here.

RFK

Cancer causes autism

I thought the article was telling an unmarried woman that AI can find the cancer pathologists she’s been looking for. Not sure why they would be hiding.

Don’t quit your day job.