Imagine how much more they could’ve just paid employees.

Nah. Profits are growing, but not as fast as they used to. Need more layoffs and cut salaries. That’ll make things really efficient.

Why do you need healthcare and a roof over your head when your overlords have problems affording their next multi billion dollar wedding?

I really understand this is a reality, especially in the US, and that this is really happening, but is there really no one, even around the world, who is taking advantage of laid-off skilled workforce?

Are they really all going to end up as pizza riders or worse, or are there companies making a long-term investment in workforce that could prove useful for different uses in the short AND long term?

I am quite sure that’s what Novo Nordisk is doing with their hiring push here in Denmark, as long as the money lasts, but I would be surprised if no one is doing it in the US itself.

My theory is the money-people (VCs, hedge-fund managers, and such) are heavily pushing for offshoring of software engineering teams to places where labor is cheap. Anecdotally, that’s what I’ve seen personally; nearly every company I’ve interviewed with has had a few US developers leading large teams based in India. The big companies in the business domain I have the most experience with are exclusively hiring devs in India and a little bit in Eastern Europe. There’s a huge oversupply of computer science grads in India, so many are so desperate they’re willing to work for almost nothing just to get something on their resume and hopefully get a good job later. I saw one Indian grad online saying he had 2 internship offers, one offering $60 USD/month, and the other $30/month. Heard offshore recruitment services and Global Capability Centers are booming right now.

You misspelled “shares they could have bought back”

It’s as if it’s a bubble or something…

sigh

Dustin’ off this one, out from the fucking meme archive…

https://youtube.com/watch?v=JnX-D4kkPOQ

Millennials:

Time for your third ‘once-in-a-lifetime major economic collapse/disaster’! Wheeee!

Gen Z:

Oh, oh dear sweet summer child, you thought Covid was bad?

Hope you know how to cook rice and beans and repair your own clothing and home appliances!

Gen A:

Time to attempt to learn how to think, good luck.

Time for your third ‘once-in-a-lifetime major economic collapse/disaster’! Wheeee!

Wait? Third? I feel like we’re past third. Has it only been three?

Dot com bubble, the great recession, covid. So yeah, that would be the fourth coming up.

You can also use 9/11 + GWOT in place of the dotcom bubble, for ‘society reshaping disaster crisis’

So uh, silly me, living in the disaster hypercapitalism era, being so normalized to utterly world-redefining chaos at every level, so often, that I have lost count.

That is more American focused though. Sure I heard about 9/11 but I was 8 and didn’t really care because I wanted to go play outside.

True, true, sorry, my America-centrism is showing.

Or well, you know, it was a formative and highly traumatic ‘core memory’ for me.

And, at the time, we were the largest economy in the world, and that event broke our collective minds, and reoriented that economy, and our society, down a dark path that only ended up causing waste, death and destruction.

Imagine the timeline where Gore won, not Bush, and all the US really did was send a specops team into Afghanistan to get Bin Laden, as opposed to occupying the whole country, and never did Iraq 2.

That’s… a lot of political capital and money that could have been directed to… anything else, I dunno, maybe kickstarting a green energy push?

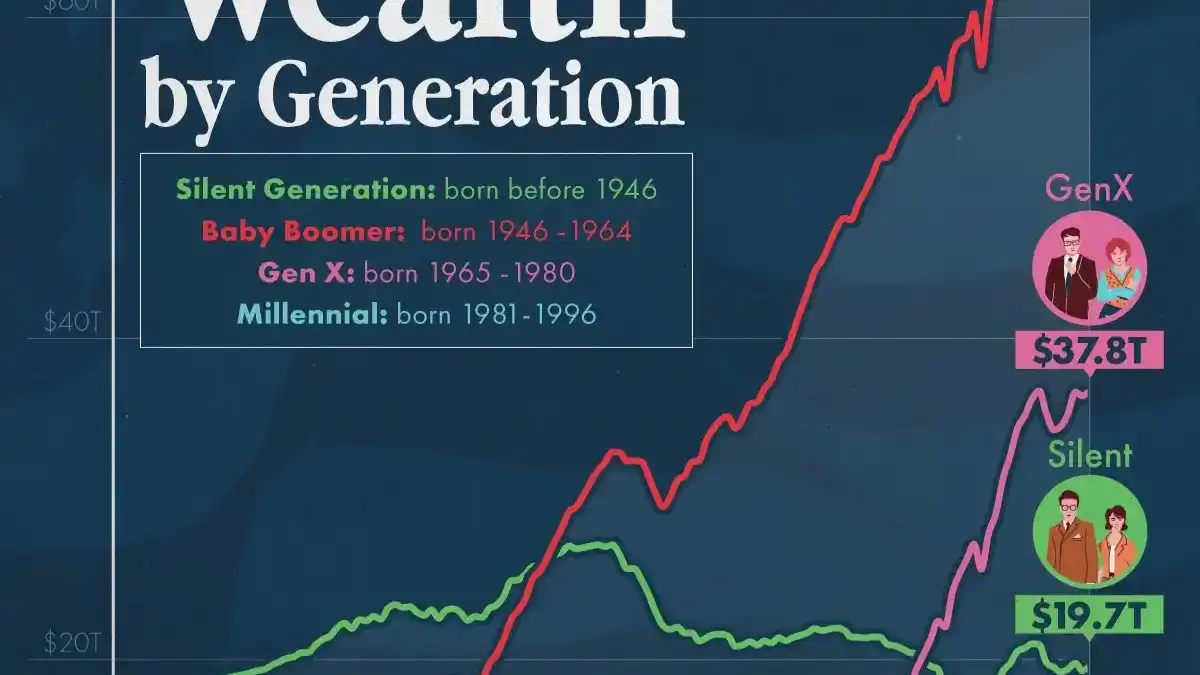

Wait for Gen X to pop in as usual and seek attention with some “we always get ignored” bullshit.

Who cares what Gen X thinks, they have all the money.

During Covid Gen X got massively wealthier while every other demographic got poorer.

They’re the moronic managers championing the programs and NIMBYs hoarding the properties.

Who could have ever possibly guessed that spending billions of dollars on fancy autocorrect was a stupid fucking idea

This comment really exemplifies the ignorance around AI. It’s not fancy autocorrect, it’s fancy autocomplete.

It’s fancy autoincorrect

Fancy autocorrect? Bro lives in 2022

EDIT: For the ignorant: AI has been in rapid development for the past 3 years. For those who are unaware, it can also now generate images and videos, so calling it autocorrect is factually wrong. There are still people here who base their knowledge on 2022 AIs and constantly say ignorant stuff like “they can’t reason”, while geniuses out there are doing stuff like this: https://xcancel.com/ErnestRyu/status/1958408925864403068

EDIT2: Seems like every AI thread gets flooded with people showing their age who keep talking about outdated definitions, not knowing which system fits the definition of reasoning, or how that term is used in the modern age.

I already linked this below, but for those who want to educate themselves on more up to date terminology and different reasoning systems used in IT and tech world, take a deeper look at this: https://en.m.wikipedia.org/wiki/Reasoning_system

I even loved how one argument went “if you change underlying names, the model will fail more often, meaning it can’t reason”. No, if a model still manages to show some success rate, then the reasoning system literally works, otherwise it would fail 100% of the time… Use your heads when arguing.

As another example, this time of language reasoning and pattern recognition (which is also a reasoning system): https://i.imgur.com/SrLX6cW.jpeg answer; https://i.imgur.com/0sTtwzM.jpeg

Note that the term is used differently outside information technology, but we’re quite clearly talking about tech and IT, not neuroscience, where reasoning would mean something quite different. The systems used in AI are, by modern definitions, reasoning systems, literally meaning they reason. Think of it like artificial intelligence versus intelligence.

I will no longer answer comments below as pretty much everyone starts talking about non-IT reasoning or historical applications.

You do realise that everyone actually educated in statistical modeling knows that you have no idea what you’re talking about, right?

Note that I’m not one of the people talking about it on X, I don’t know who they are. I just linked it with a simple “this looks like reasoning to me”.

Yes, your confidence in something you apparently know nothing about is apparent.

Have you ever thought that openai, and most xitter influencers, are lying for profit?

They can’t reason. LLMs, which is what all the latest and greatest models like GPT5 or whatever still are, generate output by taking every previous token (simplified) and using them to generate the most likely next token. Thanks to their training, this results in pretty good human-looking language, among other things like somewhat effective code output (thanks to sites like Stack Overflow being included in the training data).
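For anyone who wants to see the "most likely next token" loop in miniature, here is a rough sketch. Everything in it is an assumption made for illustration: the probability table is invented, and the toy only looks at the last token, whereas a real LLM conditions on all previous tokens using a trained network. It only shows the difference between always picking the top candidate and sampling with some randomness.

```python
import random

# Hypothetical next-token probabilities, invented for this toy example.
# A real model computes these from the whole preceding context.
NEXT_TOKEN_PROBS = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "dog": {"ran": 0.8, "sat": 0.2},
    "sat": {"<end>": 1.0},
    "ran": {"<end>": 1.0},
}


def generate(start, sample=False, seed=None, max_tokens=10):
    """Extend `start` one token at a time until <end> or max_tokens."""
    rng = random.Random(seed)
    tokens = [start]
    while len(tokens) < max_tokens:
        probs = NEXT_TOKEN_PROBS.get(tokens[-1])
        if probs is None:
            break  # no known continuation for this token
        if sample:
            # Randomness: draw the next token in proportion to its probability.
            next_token = rng.choices(list(probs), weights=list(probs.values()))[0]
        else:
            # Greedy: always take the single most likely next token.
            next_token = max(probs, key=probs.get)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


greedy = generate("the")
sampled = generate("the", sample=True, seed=1)
print(greedy)
print(sampled)
```

Greedy mode gives the same output every run; sampling mode varies unless the seed is fixed.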

Generating images works essentially the same way but is more easily described as reverse jpg compression. You think I’m joking? No really, they start out with static and then transform the static using a bunch of wave functions they came up with during training. LLMs and the image generation stuff are equally able to reason, that being not at all whatsoever.

You partly described reasoning tho

If you truly believe that you fundamentally misunderstand the definition of that word or are being purposely disingenuous as you Ai brown nose folk tend to be. To pretend for a second you genuinely just don’t understand how to read LLMs, the most advanced “Ai” they are trying to sell everybody is as capable of reasoning as any compression algorithm, jpg, png, webp, zip, tar whatever you want. They cannot reason. They take some input and generate an output deterministically. The reason the output changes slightly is because they put random shit in there for complicated important reasons.

Again to recap here LLMs and similar neural network “Ai” is as capable of reasoning as any other computer program you interact with knowingly or unknowingly, that being not at all. Your silly Wikipedia page is a very specific term “Reasoning System” which would include stuff like standard video game NPC Ai such as the zombies in Minecraft. I hope you aren’t stupid enough to say those are capable of reasoning

Wtf?

Do I even have to point out the parts you need to read? Go back and start reading at the sentence that says “In typical use in the Information Technology field however, the phrase is usually reserved for systems that perform more complex kinds of reasoning.”, and then check out the NLP page, or the part about machine learning, which are all separate/different reasoning systems, but we just tend to say “reasoning”.

Not your hilarious NPC analogy.

This link is about reasoning systems, not reasoning. Reasoning involves actually understanding the knowledge, not just having it, and testing or validating where knowledge is contradictory.

LLM doesn’t understand the difference between hard and soft rules of the world. Everything is up to debate, everything is just text and words that can be ordered with some probabilities.

It cannot check if something is true, it just ‘knows’ that someone on the internet talked about something, sometimes with and often without or contradicting resolutions…

It is a gossip machine that tries to ‘reason’ about whatever it has heard people say.

This comment, summarising the author’s own admission, shows AI can’t reason:

this new result was just a matter of search and permutation and not discovery of new mathematics.

I never said it discovered new mathematics (edit: yet), I implied it can reason. This is a clear example of reasoning to solve a problem.

You need to dig deeper into how that “reasoning” works, but you got misled if you think it does what you say it does.

Can you elaborate? How is this not reasoning? Define reasoning to me

Deep research independently discovers, reasons about, and consolidates insights from across the web. To accomplish this, it was trained on real-world tasks requiring browser and Python tool use, using the same reinforcement learning methods behind OpenAI o1, our first reasoning model. While o1 demonstrates impressive capabilities in coding, math, and other technical domains, many real-world challenges demand extensive context and information gathering from diverse online sources. Deep research builds on these reasoning capabilities to bridge that gap, allowing it to take on the types of problems people face in work and everyday life.

While that contains the word “reasoning”, that does not make it such. If this is about the new “reasoning” capabilities of the new LLMs: it was, if I recall correctly, found out that it’s not actually reasoning, just doing fancy footwork to appear as if it was reasoning, just like it’s doing fancy dice rolling to appear to be talking like a human being.

As in, if you just change the underlying numbers and names on a test, the models will fail more often, even though the logic of the problem stays the same. This means, it’s not actually “reasoning”, it’s just applying another pattern.

With the current technology we’ve gone so far into this brute forcing the appearance of intelligence that it is becoming quite the challenge in diagnosing what the model is even truly doing now. I personally doubt that the current approach, which is decades old and ultimately quite simple, is a viable way forwards. At least with our current computer technology, I suspect we’ll need a breakthrough of some kind.

But besides the more powerful video cards, the basic principles of the current AI craze are the same as they were in the 70s or so when they tried the connectionist approach with hardware that could not parallel process, and had only datasets made by hand and not with stolen content. So, we’re just using the same approach as we were before we tried to do “handcrafted” AI with LISP machines in the 80s. Which failed. I doubt this earlier and (very) inefficient approach can solve the problem, ultimately. If this keeps on going, we’ll get pretty convincing results, but I seriously doubt we’ll get proper reasoning with this current approach.

But pattern recognition is literally reasoning. Your argument sounds like “it reasons, but not as good as humans, therefore it does not reason”

I feel like you should take a look at this: https://en.m.wikipedia.org/wiki/Reasoning_system

Thank god they have their metaverse investments to fall back on. And their NFTs. And their crypto. What do you mean the tech industry has been nothing but scams for a decade?

Imagine what the economy would look like if they spent 30 billion on wages.

If we’re just talking about the USA, then the ~200 million working people would get $150 each.
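A quick check of that division, using only the two round figures from the comments above (30 billion dollars and roughly 200 million workers):

```python
total_spend = 30_000_000_000  # the 30 billion dollars mentioned in the thread
workers = 200_000_000         # the rough US workforce figure from the comment

per_worker = total_spend / workers
print(f"${per_worker:,.0f} per worker")
```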

Does the 30 billion also account for allocated resources (such as the incredibly demanding amount of electricity required to run a decent AI for millions if not billions of future doctors and engineers to use to pass exams)?

Does it account for the future losses of creativity & individuality in this cesspool of laziness & greed?

We could always just confiscate all fortunes over 900 million dollars.

The 5 richest billionaires have a combined $1.154 trillion, which divided by 340 million gives us $3,394 per American citizen. That’s literally just the top 5. According to Forbes there were 813 billionaires in 2024. Sounds pretty damned substantial to me. We’re talking life-altering amounts of money for every American without even glancing in the direction of mere hundred-millionaires. And all the billionaires could still be absurdly wealthy.
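The same kind of check for this figure, taking the comment’s own numbers at face value (a combined $1.154 trillion and a population of about 340 million):

```python
combined_wealth = 1_154_000_000_000  # top-5 total as quoted in the comment
population = 340_000_000             # approximate US population used in the comment

per_person = combined_wealth / population
print(f"${per_person:,.0f} per person")
```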

This is where the problem of the supply/demand curve comes in. One of the truths of the 1980s Soviet Union’s infamous breadlines wasn’t that people were poor and had no money, or that basic goods (like bread) were too expensive — in a Communist system most people had plenty of money, and the price of goods was fixed by the government to be affordable — the real problem was one of production. There simply weren’t enough goods to go around.

The entire basic premise of inflation is that we as a society produce X amount of goods, but people need X+Y amount of goods. Ideally production increases to meet demand — but when it doesn’t (or can’t fast enough) the other lever is that prices rise so that demand decreases, such that production once again closely approximates demand.

This is why just giving everyone struggling right now more money isn’t really a solution. We could take the assets of the 100 richest people in the world and redistribute them evenly amongst people who are struggling, and all that would happen is that there wouldn’t be enough production to meet the new spending ability, so prices would go up. Those who control the production would simply get all their money back again, and we’d be back to where we started.
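To make the mechanism being claimed here concrete, a toy sketch follows. It assumes a straight-line demand curve and a fixed quantity of goods, and every number in it is made up; it illustrates the textbook argument, not how any real market has behaved.

```python
def clearing_price(demand_intercept, demand_slope, supply):
    """Price at which linear demand (intercept - slope * price) equals supply."""
    return (demand_intercept - supply) / demand_slope


# Hypothetical numbers: 80 units produced, demand of 100 units at price zero.
before = clearing_price(demand_intercept=100, demand_slope=10, supply=80)

# Extra money shifts demand up by 20 units at every price; production is unchanged.
after_cash = clearing_price(demand_intercept=120, demand_slope=10, supply=80)

# Same demand shift, but production also rises by 20 units.
after_cash_and_output = clearing_price(demand_intercept=120, demand_slope=10, supply=100)

print(before, after_cash, after_cash_and_output)
```

In this toy setup the price doubles when only spending power rises, and returns to its starting level when production rises by the same amount.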

Of course, it’s only profitable to increase production if the cost of basic inputs can be decreased — if you know there is a big untapped market for bread out there and you can undercut the competition, cheaper flour and automation helps quite a bit. But if flour is so expensive that you can’t undercut the established guys, then fighting them for a small slice of the market just doesn’t make sense.

Personally, I’m all for something like UBI — but it’s only really going to work if we as a society also increase production on basic needs (housing, food, clothing, telecommunications, transit, etc.) so they can be and remain at affordable prices. Otherwise just having more money in circulation won’t help anything — if anything it will just be purely inflationary.

There are more empty homes than homeless in the US. I’ve seen literal tons of food and clothing go right to the dump to protect profit margins.

Do you have any sources to back up the claim that we need to make more shit?

We could take the assets of the 100 richest people in the world and redistribute them evenly amongst people who are struggling, and all that would happen is that there wouldn’t be enough production to meet the new spending ability, so prices would go up. Those who control the production would simply get all their money back again, and we’d be back to where we started.

Then we should do that over and over again.

This is not true. We have enough production. Wtf are people throwing away half their plates at restaurants? Why does one rich guy live in a mansion? The super rich consume more than people realize. You are wrong on so many levels that I do not know where to start. You sound like a bot billionaire shill.

We have enough production in some areas — but not in others. Some goods are currently overly expensive because the inputs are expensive — mostly because we’re not producing enough. In many cases that’s due to insufficient competition. And there are some significant entrenched interests trying to keep things that way (lower production == lower competition == higher prices).

And FWIW, the US’s current “tariff everything and everybody” approach is going to make this much, much, much worse.

I am certainly not the friend of billionaires. I’m perfectly fine with a wealth tax to fund public works and services. All I’m against is overly simplistic solutions which just exacerbate existing problems.

You sound like a dad after reading the morning press.

You are repeating indoctrinated capitalist think patterns. In reality the market most often does not react like that.

The example you gave is basically how you teach the concept of market balance to middle schoolers. However, it’s a hypothetical lab analogy. It’s oversimplified for lay people. Comparable to the famous “ignore air resistance” in physics.

Markets are at times efficient, at other times inefficient. They may even be both concurrently.

First, economists do not believe that the market solves all problems. Indeed, many economists make a living out of analyzing “market failures” such as pollution in which laissez faire policy leads not to social efficiency, but to inefficiency.

Like our colleagues in the other social and natural sciences, academic economists focus their greatest energies on communicating to their peers within their own discipline. Greater effort can certainly be given by economists to improving communication across disciplinary boundaries

In the real world, it is not possible for markets to be perfect due to inefficient producers, externalities, environmental concerns, and lack of public goods.

My experience with AI so far is that I have to waste more time fine-tuning my prompt to get what I want, and I still end up with some obvious issues that I have to fix manually. The only way I would know about these issues is my prior experience, which I will stop gaining if I start depending on AI too much. Plus, it creates unrealistic expectations from employers on execution time. It’s the worst thing that has happened to the tech industry. I hate my career now and just want to switch to any boring but stable low-paying job, if only I didn’t have to worry about going through months of job hunting.

Similar experience here. I recently took the official Google “prompting essentials” course. I kept an open mind and modest expectations; this is a tool that’s here to stay. Best to just approach it as the next Microsoft Word and see how it can add practical value.

The biggest thing I learned is that getting quality outputs will require at least a paragraph-long, thoughtful prompt and 15 minutes of iteration. If I can DIY in less than 30 minutes, the LLM is probably not worth the trouble.

I’m still trying to find use cases (I don’t code), but it often just feels like a solution in search of a problem….

Sounds like we all just want to retire as goat farmers. Just like before. The more things change… they say.

Does this mean they’ll invest the money in paying workers? No… they’ll just have to double down.

They’ll happily burn mountains of profits on that stuff, but not on decent wages or health insurance.

Wages or health insurance are a very known cost, with a known return. At some point the curve flattens and the return gets less and less for the money you put in. That means there is a sweet spot, but most companies don’t even want to invest that much to get to that point.

AI however, is the next new thing. It’s gonna be big, huge! There’s no telling how much profit there is to be made!

Because nobody has calculated any profits yet. Services seem to run at a loss so far.

However, everybody and their grandmother is into it, so lots of companies feel the pressure to do something with it. They fear they will no longer be relevant if they don’t.

And since nobody knows how much money there is to be made, every company is betting that it will be a lot. Where wages and insurance are a known cost/investment with a known return, AI is not, but companies are betting the return will be much bigger.

I’m curious how it will go. Either the bubble bursts or companies slowly start to realise what is happening and shift their focus to the next thing. In the latter case, we may eventually see some AI develop that is useful.

Some of them won’t even pay to replace broken office chairs for the employees they forced to RTO.

Once again we see the Parasite Class playing unethically with the labour/wealth they have stolen from their employees.

Losing money is called going into debt, not just zero returns.

We could have housed and fed every homeless person in the US. But no, gibbity go brrrr

Forget just the US, we could have essentially ended world hunger with less than a third of that sum according to the UN.

Could’ve told them that for $1B.

Heck, I’da done it for just 1% of that.

Still $10m… ffs. Nobody needs $1B

Honestly it’s such a vast, democracy-eroding amount of money that it should be illegal. It’s like letting an individual citizen own a small nuke.

Even if they somehow do nothing with it, it has a gravitational effect on society just by existing in the hands of a person.

I have no proof, but with the AI push, Turnip getting re-elected, and his rollback of the EPA rules, it feels like this whole AI thing was an excuse to burn more fossil fuels.

If I was invested in AI, and considering AI’s thirst for electricity, I would absolutely make a similar investment in energy. That way, as the AI server farms suck up the electricity I would get at least some of that money back from the energy market.